What Meta Compute tells us about Meta's long-term AI plans

Meta is shifting from a reactive sprint to marathon mode on AI data centres.

Meta today unveiled Meta Compute, which will lead the expansion of Meta's data centre capacity into the "tens" and "hundreds" of gigawatts. Here's what it's about.

Mark Zuckerberg announced the establishment of Meta Compute, a new internal organisation at Meta, in a Threads post earlier today.

The effort will be led by long-time Meta engineering executive Santosh Janardhan and former Safe Superintelligence co-founder Daniel Gross.

Here's my take on it.

Non-AI data centres

Meta has massively built up its AI data centres in recent years, with some reports citing as much as US$72 billion in capital spending in 2025 alone.

What isn't obvious to most people is that Meta already had a sizeable global network of data centres before generative AI became a thing.

In Singapore, Meta has a 150MW data centre; I was at the media announcement in 2018. I've long felt this was a mistake, given that it accounts for more than 10% of Singapore's 2025 data centre capacity, but that's a story for another day.

Anyway, this global network of data centres serves as the backbone of Meta's extremely profitable social media platforms such as Facebook and Instagram.

Optimising resources

The massive AI data centre buildup at Meta has seen it scramble to deploy new data centre capacity for its multi-billion-dollar GPU clusters.

This includes the use of tents to house temporary data centre capacity, as it sought to deploy around 1.3 million GPUs by the end of 2025.

But with the requirements for AI diverging from those of traditional data centres, Meta is effectively building two types of facilities: AI and non-AI data centres.

I see Meta Compute as a move to better optimise the deployment of both types of data centres, as it shifts to a longer-term view of its AI data centre investments.

Indeed, Zuckerberg noted that Janardhan will be in charge of "technical architecture, software stack... and building and operating our global datacenter fleet and network."

Custom chips for the long haul

A "silicon program" was also mentioned, which I believe refers to Meta's efforts to develop its own AI chips, a trend also seen at other AI giants from OpenAI to Google.

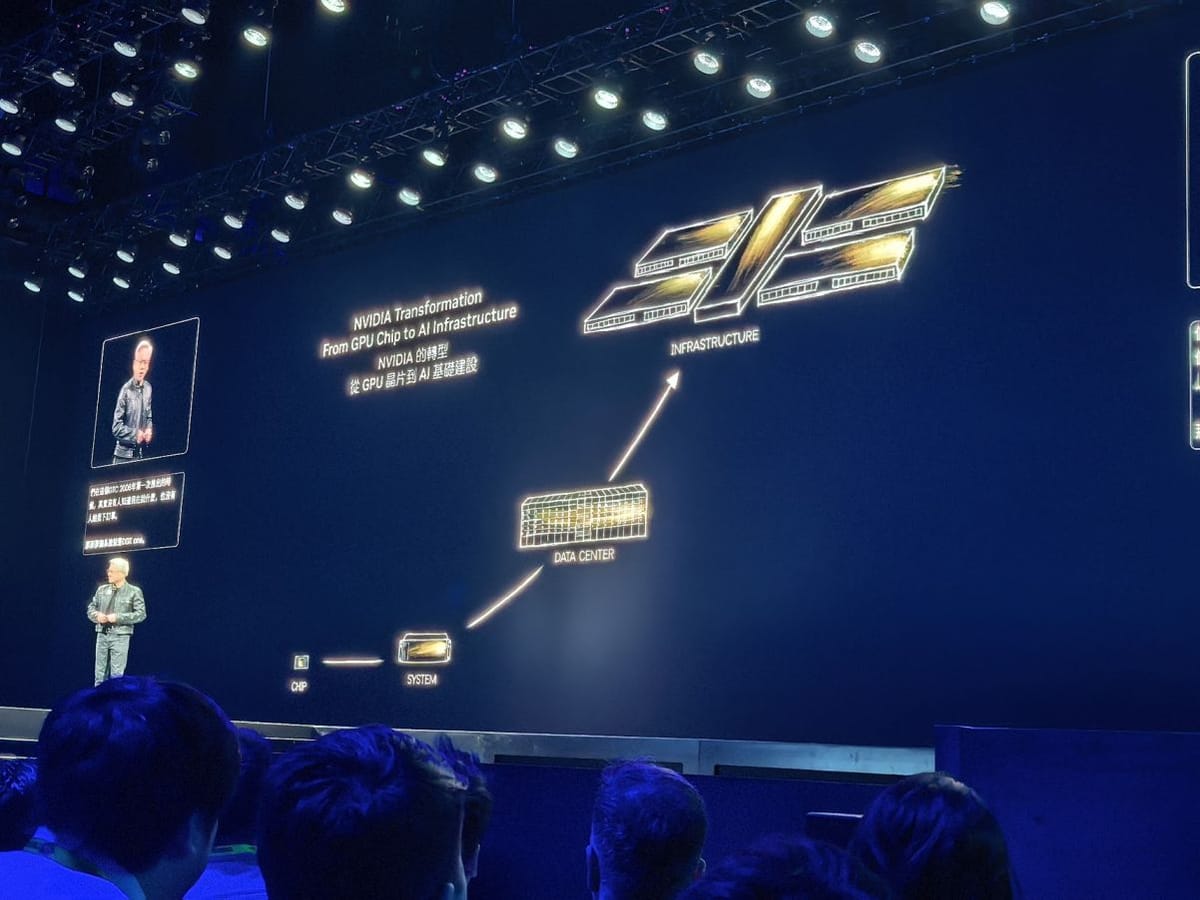

At the moment, Meta relies mainly on GPUs from Nvidia. Coincidentally, a data centre insider I spoke with today observed that the Nvidia ecosystem, while exceptional, is also very pricey.

In my view, Meta Compute reflects Meta moving from a reactive sprint to marathon mode as it commits to AI deployments for the long haul.