We need a lot more AI, says Jensen Huang

Shows off new products to further entrench Nvidia's role in AI.

"[When] we started our company, I was trying to figure out how big our opportunity was in 1993. I came to the conclusion Nvidia's business opportunity was enormous: $300 billion - we're going to be rich!" - Jensen.

We need more AI, and "accelerated computing" is the future. And oh, Nvidia is no longer a GPU firm, says Jensen Huang at this morning's keynote.

In his GTC Taipei keynote of exactly 1.5 hours at the Taipei Music Center, the charismatic CEO spoke to a packed hall about Nvidia's shift into AI infrastructure.

We will need a lot more AI

Unsurprisingly, Jensen thinks we will need a lot more AI for inference. The concept of inference-time scaling isn't new, and can be summed up as follows:

- Reason by breaking a problem into steps.

- Allocate more time to think more deeply.

- Generate multiple answers and pick the best.
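The last bullet is commonly called best-of-N sampling. Below is a minimal Python sketch of the idea, assuming a hypothetical `generate` function (standing in for a model) and a hypothetical `score` function (standing in for a verifier or reward model). Neither is an Nvidia API; raising `n` is simply one way to spend more compute at inference time.

```python
import random
from typing import Callable, List


def best_of_n(generate: Callable[[], str],
              score: Callable[[str], float],
              n: int) -> str:
    """Sample n candidate answers and return the highest-scoring one."""
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates: List[str] = [generate() for _ in range(n)]
    return max(candidates, key=score)


# Hypothetical stand-ins: a "model" that guesses numbers at random,
# and a "verifier" that prefers answers closer to 42.
rng = random.Random(0)


def toy_generate() -> str:
    return str(rng.randint(0, 100))


def toy_score(answer: str) -> float:
    return -abs(int(answer) - 42)


print(best_of_n(toy_generate, toy_score, n=1))
print(best_of_n(toy_generate, toy_score, n=32))
```

With more samples, the chance that at least one candidate lands close to the target goes up, which is the intuition behind trading extra inference compute for better answers.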

He pointed out that a single unit of the upcoming Nvidia GB300 server is "as powerful" as the 2018 Sierra supercomputer, measured in PFLOPS (petaflops, or quadrillions of floating-point operations per second).

Depth of capabilities

Nvidia is ideally positioned for accelerated computing, says Jensen, with its unique stack of hardware, system software, and libraries.

"What makes Nvidia really special is the fusion of these capabilities, especially, the algorithms - the libraries - what we call the CUDA-X libraries."

This stems from the observation that a small portion of a program's code typically accounts for most of its runtime. Nvidia therefore created CUDA in 2007 to offload those hot spots to GPUs.
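The keynote didn't frame it this way, but Amdahl's law is a standard way to quantify this reasoning: if a fraction p of the runtime is accelerated by a factor s, the overall speedup is 1 / ((1 - p) + p / s). Here is a minimal Python sketch; the 90% and 50x figures are purely illustrative assumptions, not numbers from the keynote.

```python
def amdahl_speedup(hot_fraction: float, hot_speedup: float) -> float:
    """Overall speedup when only `hot_fraction` of the runtime is
    accelerated by a factor of `hot_speedup` (Amdahl's law)."""
    if not 0.0 <= hot_fraction <= 1.0:
        raise ValueError("hot_fraction must be between 0 and 1")
    if hot_speedup <= 0:
        raise ValueError("hot_speedup must be positive")
    return 1.0 / ((1.0 - hot_fraction) + hot_fraction / hot_speedup)


# Illustrative only: 90% of runtime sits in a few hot routines,
# and the GPU runs those routines 50x faster.
print(round(amdahl_speedup(0.90, 50.0), 2))

# Even an (almost) infinitely fast accelerator is capped by the 10%
# of runtime left on the CPU.
print(round(amdahl_speedup(0.90, 1e12), 2))
```

With these assumed inputs the overall gain is about 8.5x, and it can never exceed 10x. Once the hot spots are offloaded, the remaining CPU-bound code dominates, which is one way to read why it pays to keep building libraries domain by domain.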

Over the years, the team went after one domain after another, creating additional drop-in libraries for:

- Numerics.

- Data science.

- Deep learning.

- Signal processing.

- Computer graphics.

- ... and many more.

The result is a deeply entrenched advantage that no competitor has come close to matching.

Nvidia RTX Pro Server

Jensen also showed off the Nvidia RTX Pro Server, which he bills as an "enterprise-grade" platform to bridge the gap between CPU-only servers and AI-centric data centre deployments.

Each server comes with:

- 8x RTX PRO 6000 Blackwell GPUs.

- CX8 control plane at 800Gb/sec.

- BlueField-3 DPUs.

I did a back-of-the-envelope calculation, and a rack of RTX Pro Servers would probably draw 25kW to 50kW. That is definitely much easier to accommodate in existing enterprise data centres.
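The post doesn't list the inputs behind that estimate, so here is a hypothetical Python sketch of how such a rack-power calculation can be set up. Only the 8 GPUs per server comes from the post. The 600 W per GPU, the 1,500 W of per-server overhead (CPUs, DPUs, NICs, fans), and the 4 to 8 servers per rack are placeholder assumptions chosen to show the arithmetic, not Nvidia specifications or the author's actual figures.

```python
def rack_power_kw(servers_per_rack: int,
                  gpus_per_server: int,
                  watts_per_gpu: float,
                  overhead_watts_per_server: float) -> float:
    """Estimate rack power draw in kilowatts as
    servers x (GPU power + non-GPU overhead per server)."""
    per_server_w = gpus_per_server * watts_per_gpu + overhead_watts_per_server
    return servers_per_rack * per_server_w / 1000.0


# 8 GPUs per server is from the post; every other number is a
# hypothetical placeholder, not a published specification.
low = rack_power_kw(servers_per_rack=4, gpus_per_server=8,
                    watts_per_gpu=600, overhead_watts_per_server=1500)
high = rack_power_kw(servers_per_rack=8, gpus_per_server=8,
                     watts_per_gpu=600, overhead_watts_per_server=1500)
print(f"{low:.1f} kW to {high:.1f} kW")
```

Swapping in real per-GPU power limits and the number of servers a given facility can fit per rack is the whole exercise; the structure of the calculation stays the same.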

I'll share more from Jensen's keynote in another post later this week, and I'll add a comment here when it's out. You can "like" this post so that LinkedIn notifies you.

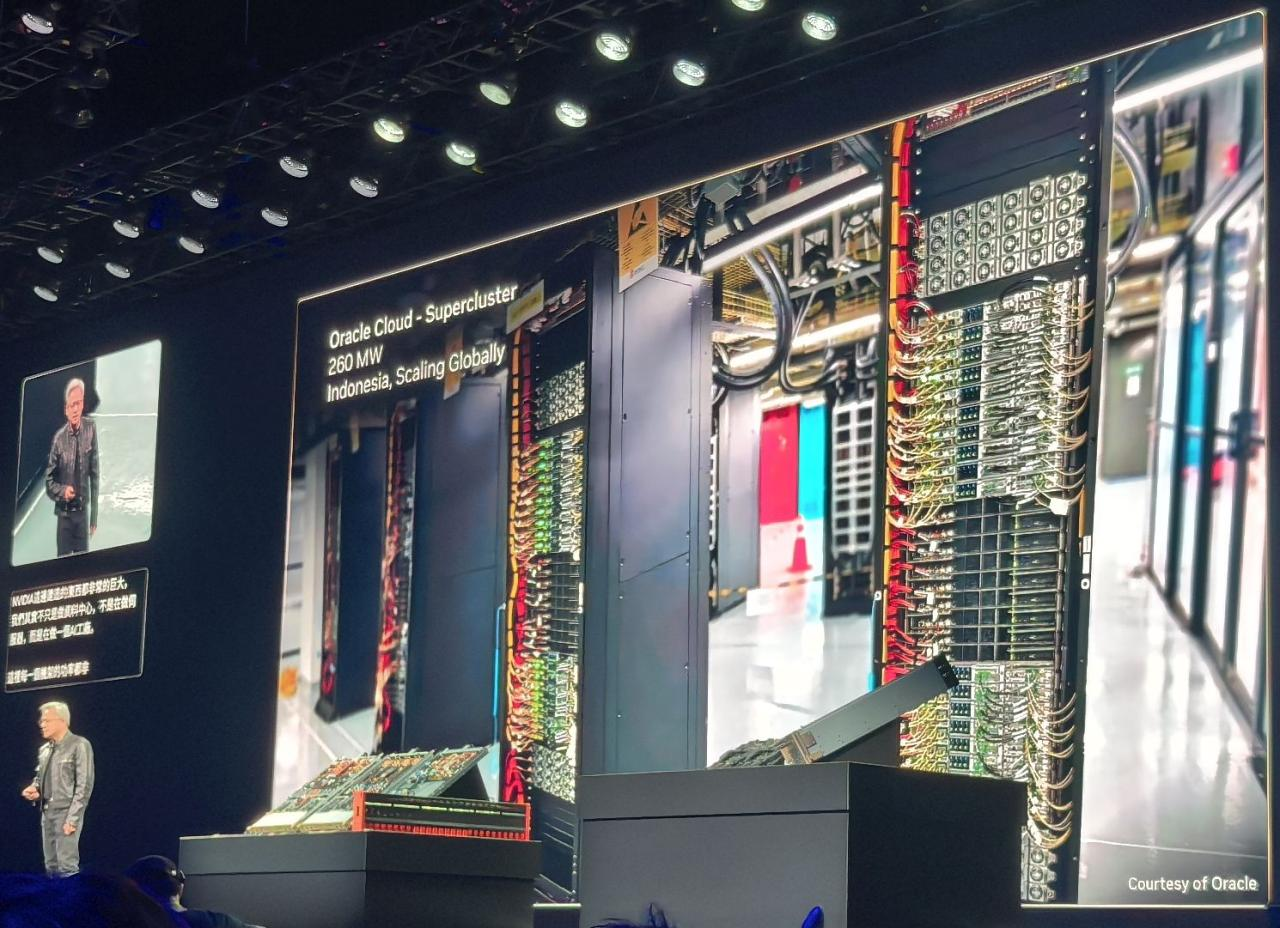

Caption: (Right) If you are from Indonesia, you'll be interested in this photo.