Vera Rubin and chillers: What Huang actually said

Cooling is complex. Huang's comments only apply to Vera Rubin-only deployments.

Last week, shares of several data centre cooling vendors fell after Nvidia's Jensen Huang said Vera Rubin GPUs won't need chillers. In practice, nothing has changed.

I found the market reaction fascinating because the upcoming Vera Rubin has the same inlet water temperature and flow rate as current Grace Blackwell chips.

The story so far

At his CES keynote last Monday, Huang said a server rack running the company's new Vera Rubin chips "doesn't need a chiller" to work.

This prompted shares of Johnson Controls, Carrier, and other HVAC companies to fall at market open the next day, though most had largely recovered by the end of the week.

Huang said: "At 45 degrees Celsius, the data center doesn't need a chiller. We essentially used hot water to cool this supercomputer, with incredibly high efficiency."

While I secured a slot for the keynote, a scheduling conflict meant I ultimately couldn't make it. I only read about it later. Let's break it down.

Staying cool

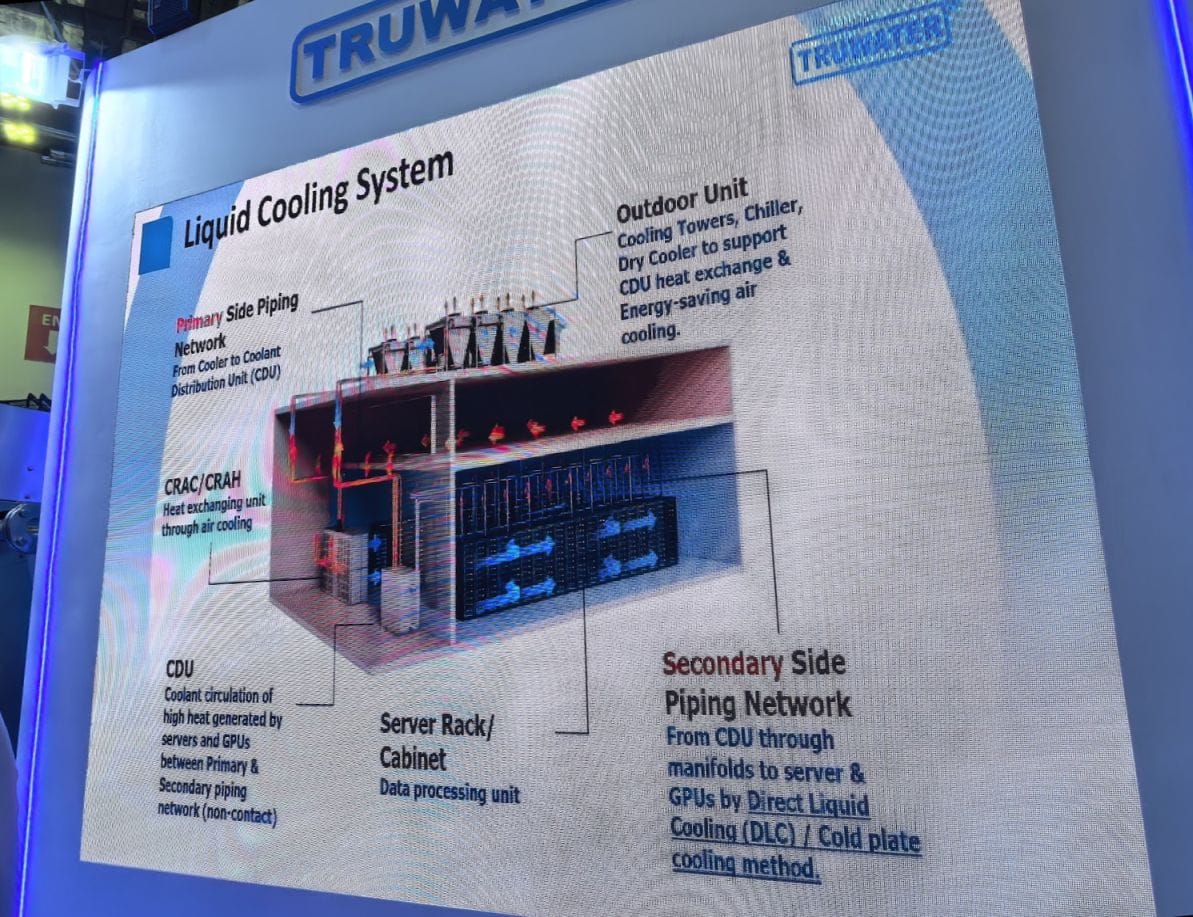

Like Grace Blackwell, Vera Rubin is mainly cooled with direct-to-chip liquid cooling, in which liquid is piped through cold plates within each GPU server.

Because liquid cooling removes heat so efficiently, the incoming (inlet) liquid doesn't have to be as cold. In fact, both GPUs will work with inlet temperatures of 45°C.

This aspect of liquid cooling isn't well known.

Air-cooling systems in the form of CRAH units require a lower inlet temperature. The cold water runs through a cooling coil, and a fan blows air across the coil and into the room.

Photo Caption: (Left) StatePoint Liquid Cooling (SPLC) at the STDCT in NUS. (Middle, Right) Illustrations showing cooling deployments.

Cooling is complex

While the need for cooling in data centres is conceptually easy to understand, actual cooling deployments are a lot more complex.

In hot and tropical Southeast Asia, data centres typically use both cooling towers and chillers to bring inlet water temperatures down to the required range.
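To make the reasoning concrete, here is a rough sketch of why the required inlet temperature decides whether a chiller is needed. It assumes a cooling tower can only cool water to the ambient wet-bulb temperature plus an "approach" of a few degrees, and that some temperature is lost across a heat exchanger. The function names and every number other than the 45°C inlet figure are illustrative assumptions, not vendor or site data.

```python
# Illustrative sketch only. All figures except the 45°C inlet are assumptions.

def tower_supply_temp_c(wet_bulb_c: float, approach_c: float) -> float:
    """Coldest water a cooling tower can supply: wet-bulb plus approach."""
    return wet_bulb_c + approach_c


def needs_chiller(
    required_inlet_c: float,
    wet_bulb_c: float,
    approach_c: float = 4.0,        # assumed tower approach
    exchanger_loss_c: float = 2.0,  # assumed loss across a heat exchanger
) -> bool:
    """True when tower water alone cannot reach the required inlet temperature."""
    achievable_c = tower_supply_temp_c(wet_bulb_c, approach_c) + exchanger_loss_c
    return achievable_c > required_inlet_c


TROPICAL_WET_BULB_C = 28.0  # assumed design wet-bulb for a hot, humid site

# Direct-to-chip loop that accepts 45°C inlet water
print(needs_chiller(45.0, TROPICAL_WET_BULB_C))  # False

# Air-cooled hall whose CRAH units want roughly 20°C water (assumed figure)
print(needs_chiller(20.0, TROPICAL_WET_BULB_C))  # True
```

Under these assumptions, tower water arrives at about 34°C: comfortably below a 45°C liquid-cooling requirement, but well above what an air-cooled hall needs.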

It's not a one-size-fits-all approach, though.

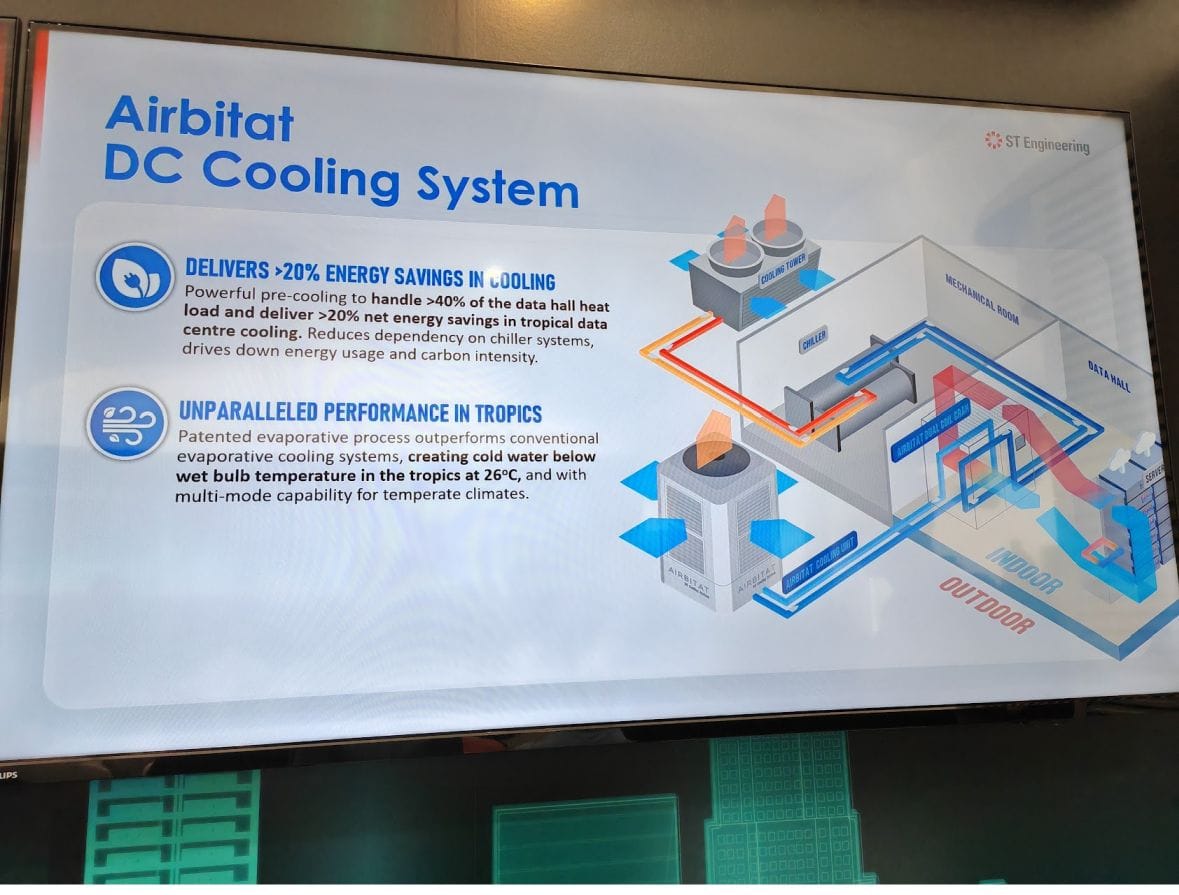

ST Engineering has an "Airbitat" data centre cooling system that it will deploy at its ST Engineering Data Centre @ Boon Lay to reduce the load on chillers.

SMC's immersion-cooled AI deployment at STT GDC's STT Singapore 6 data centre taps into the return (heated) condenser water line.

Meta's massive 150MW data centre in Singapore uses StatePoint Liquid Cooling (SPLC), which offers a more water-efficient approach.

Actual deployments can be quite different depending on data centre design, workloads, efficiency targets, and environmental conditions.

Finally, it's worth noting that the vast majority of data centres are not running GPUs. Huang's remark applies only to Vera Rubin-only deployments.