Need for cool: Nvidia's latest AI GPU needs liquid cooling

Are Nvidia's latest Blackwell B200 AI GPUs too hot for current data centres to handle?

Earlier this week, I wrote about Nvidia's new GPUs.

Today, I want to explain what these GPUs could mean for existing data centres.

🆕 The new GPUs

A quick recap of the new GPUs:

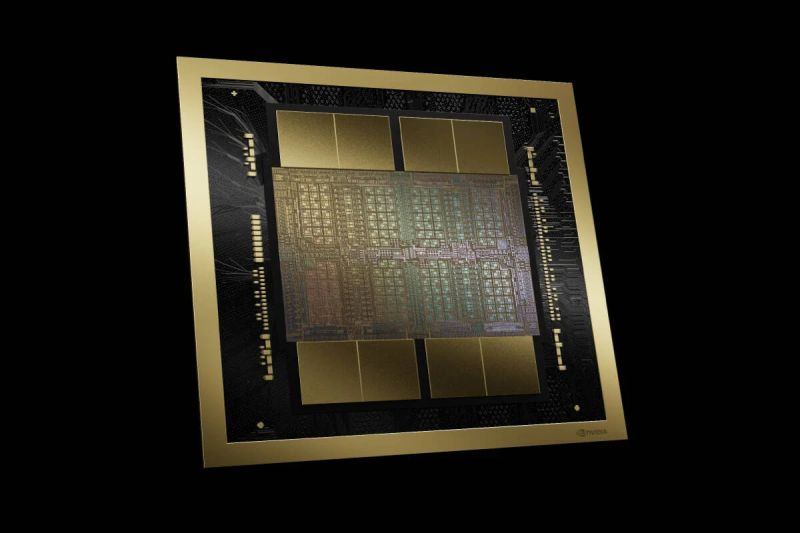

- B200 GPU: Powerful 1,000W GPU.

- B100 GPU: Slightly toned down 700W GPU.

- GB200: Combines two B200 GPUs with a Grace CPU.

I didn't elaborate then, but here's how the various products fit together.

- B200: Powerful new flagship GPU.

- B100: For use in systems designed for older H100s.

- GB200: Deployed in the GB200 NVL72 supercomputer.

* New reports have emerged that the B200 GPUs in the GB200 Superchip will run at up to 1,200W each, instead of 1,000W - likely achievable only with water cooling.

🥵 Why heat is an issue

Traditional data centres rely on air cooling at the rack level. In a hot aisle/cold aisle layout, chilled air is circulated to the cold aisle.

Servers face the cold aisle, so powerful, high-speed fans draw cold air in through the front. The resulting hot air is then expelled out the back into the hot aisle.

As servers got hotter, the number of fans steadily increased, as did their rotational speed, which can now reach tens of thousands of RPM.

Then GPUs came along, with far higher power consumption and heat output than CPU-only servers. Air cooling alone is becoming hard to rely on:

- Older data centres can't generate enough cold air.

- The power drawn by the fans rises non-linearly as they spin faster.
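The non-linear rise follows from the idealised fan affinity laws: for a given fan, airflow scales roughly linearly with rotational speed, while power scales with roughly the cube of speed. This is a minimal sketch that assumes the same fan and constant air density; real fans and server chassis deviate from it, and the function names are purely illustrative.

```python
# Idealised fan affinity laws (assumption: same fan, constant air density):
#   airflow scales linearly with speed:  Q2 / Q1 = n2 / n1
#   power scales with speed cubed:       P2 / P1 = (n2 / n1) ** 3


def fan_airflow_ratio(speed_ratio: float) -> float:
    """Relative airflow when fan speed is multiplied by speed_ratio."""
    return speed_ratio


def fan_power_ratio(speed_ratio: float) -> float:
    """Relative fan power when fan speed is multiplied by speed_ratio."""
    return speed_ratio ** 3


if __name__ == "__main__":
    # Compare airflow gained against power spent for a few speed-ups.
    for ratio in (1.0, 1.25, 1.5, 2.0):
        print(
            f"speed x{ratio:<4}  "
            f"airflow x{fan_airflow_ratio(ratio):<4}  "
            f"power x{fan_power_ratio(ratio):.2f}"
        )
```

Under these assumptions, doubling fan speed roughly doubles airflow but costs about eight times the fan power.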

🔀 Liquid cooling

One solution Nvidia suggests in its older deployment docs is to space out GPU servers, in some cases leaving as many as 2 slots empty for each server installed.

But the B200 GPU will consume 40-70% more power than the H100. Clearly, we can't keep spacing them further apart.
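The 40-70% range can be checked with simple arithmetic. This minimal sketch assumes a 700W H100 (SXM) as the baseline and uses the 1,000W and reported 1,200W B200 figures from above; the variable and function names are illustrative.

```python
# Assumption: the H100 (SXM) baseline is rated at 700 W.
H100_WATTS = 700

# B200 figures from the text: 1,000 W standard, up to 1,200 W reported in GB200.
B200_WATTS = {
    "B200 (standard)": 1000,
    "B200 in GB200 (reported)": 1200,
}


def percent_increase(new: float, old: float) -> float:
    """Percentage by which `new` exceeds `old`."""
    return (new - old) / old * 100


if __name__ == "__main__":
    for name, watts in B200_WATTS.items():
        pct = percent_increase(watts, H100_WATTS)
        print(f"{name}: {watts} W, about {pct:.0f}% more than the H100")
```

With these inputs, the B200 draws roughly 43% more power at 1,000W and roughly 71% more at 1,200W.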

Let's talk liquid cooling.

On paper, liquid cooling should be easy to deploy in a data centre. After all, large data centres already have massive, centralised cooling systems.

Instead of using the chilled water they produce to cool air via heat exchangers (air-conditioning units), why not just pipe this water all the way to individual servers?
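Water's appeal comes down to how much heat it can carry. The heat a coolant removes is Q = ρ · V̇ · c_p · ΔT, so the flow needed is V̇ = Q / (ρ · c_p · ΔT). This sketch uses rounded room-temperature properties for air and water and an assumed 10 K coolant temperature rise; it ignores real-world losses and only aims to show the order of magnitude. The names are illustrative.

```python
# Rounded fluid properties near room temperature (assumed values).
AIR = {"density": 1.2, "cp": 1005.0}       # kg/m^3, J/(kg*K)
WATER = {"density": 1000.0, "cp": 4186.0}  # kg/m^3, J/(kg*K)


def volumetric_flow(heat_watts: float, fluid: dict, delta_t_kelvin: float) -> float:
    """Volumetric flow (m^3/s) needed to carry heat_watts away with a
    coolant temperature rise of delta_t_kelvin, from Q = rho * V_dot * cp * dT."""
    return heat_watts / (fluid["density"] * fluid["cp"] * delta_t_kelvin)


if __name__ == "__main__":
    heat = 1200.0   # W, the reported per-GPU figure for B200 in GB200
    delta_t = 10.0  # K, assumed coolant temperature rise

    air = volumetric_flow(heat, AIR, delta_t)
    water = volumetric_flow(heat, WATER, delta_t)

    print(f"air:   {air * 1000:.1f} L/s")
    print(f"water: {water * 1000 * 60:.2f} L/min")
    print(f"air needs ~{air / water:,.0f}x the volume of water")
```

Under these assumptions, a single 1,200W GPU needs roughly 100 litres of air per second, but under 2 litres of water per minute: a volume ratio of about 3,500x.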

The problems:

- It's costlier to install and maintain.

- The risk of water spillage is non-zero.

- There is no broadly accepted standard for it.

In a nutshell, it might be some time before we see the widespread use of liquid cooling in data centres.

Will this slow the take-up of Nvidia's Blackwell GPUs? Only time will tell.