Nvidia launches Blackwell AI GPUs

Nvidia just unveiled its next-gen Blackwell GPUs for training trillion-parameter AI models.

To be clear, existing GPUs can already train massive AI models with over a trillion parameters.

But Nvidia says its new GPUs can do it faster, which translates to needing fewer GPUs and a much lower power budget.

According to Nvidia:

- Train a 1.8-trillion-parameter model with a quarter of the GPUs.

- Before: 8,000 older Hopper GPUs drawing 15MW.

- Now: just 2,000 Blackwell GPUs drawing 4MW.
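As a quick sanity check, this short Python sketch reworks Nvidia's quoted figures above. It uses only those numbers; the variable names are illustrative, and the "per-GPU" power is a facility-level average (the whole 15MW or 4MW budget divided by GPU count), not a per-chip rating.

```python
# Nvidia's quoted figures for training a 1.8-trillion-parameter model.
HOPPER_GPUS, HOPPER_MW = 8_000, 15
BLACKWELL_GPUS, BLACKWELL_MW = 2_000, 4

gpu_ratio = BLACKWELL_GPUS / HOPPER_GPUS    # fraction of the Hopper GPU count
power_ratio = BLACKWELL_MW / HOPPER_MW      # fraction of the Hopper power budget

# Average share of the total power budget per GPU, in kW.
hopper_kw_per_gpu = HOPPER_MW * 1_000 / HOPPER_GPUS
blackwell_kw_per_gpu = BLACKWELL_MW * 1_000 / BLACKWELL_GPUS

print(f"GPUs needed:  {gpu_ratio:.0%} of the Hopper count")
print(f"Power needed: {power_ratio:.1%} of the Hopper budget")
print(f"Budget per GPU: {hopper_kw_per_gpu:.3f} kW (Hopper) vs "
      f"{blackwell_kw_per_gpu:.3f} kW (Blackwell)")
```

The arithmetic suggests the saving comes from needing far fewer GPUs: each Blackwell GPU's share of the power budget (2kW) is actually slightly higher than each Hopper GPU's (1.875kW).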

GPT-4 is understood to have 1.76 trillion parameters.

🌠 New GPUs

The new B200 Blackwell GPU uses the "chiplet" design popularised by AMD's server chips, combining two separate dies into a single, unified GPU.

Each GPU has 208 billion transistors and supports 192GB of HBM3E memory. For reference, the (now) older H100 has just 80 billion transistors.
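To put those numbers in context, here is a rough Python sketch. Two assumptions are not from Nvidia's announcement: the transistors are split evenly across the two dies, and the 1.8-trillion-parameter model's weights are stored at 2 bytes per parameter (16-bit), ignoring gradients, optimizer state and activations, which need considerably more memory during training.

```python
import math

# Figures from the text.
B200_TRANSISTORS = 208e9
H100_TRANSISTORS = 80e9
B200_MEMORY_GB = 192
PARAMS = 1.8e12

# Assumption: transistors split evenly across the two dies.
per_die = B200_TRANSISTORS / 2
ratio = B200_TRANSISTORS / H100_TRANSISTORS

# Assumption: 16-bit weights only (2 bytes per parameter), decimal GB.
BYTES_PER_PARAM = 2
weights_gb = PARAMS * BYTES_PER_PARAM / 1e9
min_gpus_for_weights = math.ceil(weights_gb / B200_MEMORY_GB)

print(f"Transistors per die: {per_die / 1e9:.0f} billion")
print(f"B200 vs H100 transistor count: {ratio:.1f}x")
print(f"16-bit weights: {weights_gb:,.0f} GB -> at least "
      f"{min_gpus_for_weights} B200s just to hold them")
```

Even under these generous assumptions, the weights alone (3,600GB) would span at least 19 B200s, which is why models of this size are trained across many GPUs.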

The variants:

- B200 GPU: Powerful 1,000W GPU.

- B100 GPU: Slightly toned-down 700W GPU.

- GB200 Superchip: Combines 2x B200 GPUs with a Grace CPU.

🚅 Supercomputer in a rack

Nvidia also showed off its Nvidia GB200 NVL72 supercomputer, which I found particularly intriguing.

- Start from a two-rack baseline.

- Up to 18x GB200 compute nodes.

- Liquid-cooled with snap-in connectivity*.

- 5th-gen NVLink for over 1 PB/s total bandwidth.

*I assume pipes are also needed for liquid cooling. FYI, reports cite 25°C liquid in, 45°C out.
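To get a feel for the cooling load, here is a back-of-the-envelope Python sketch using Q = ṁ · c_p · ΔT. Several inputs are assumptions rather than announced specs: each of the 18 compute nodes holds two GB200 Superchips (consistent with the "72" in the name); only the 1,000W B200 figure is counted, ignoring the Grace CPUs, NVLink switches and power-conversion losses; and the coolant is treated as water (specific heat ≈ 4,186 J/kg·K, density ≈ 1 kg/L) with the reported 25°C in and 45°C out.

```python
# Rough GPU heat load and coolant flow, using Q = m_dot * c_p * delta_T.

COMPUTE_NODES = 18
SUPERCHIPS_PER_NODE = 2     # assumption: two GB200 Superchips per node
GPUS_PER_SUPERCHIP = 2      # from the text: GB200 = 2x B200 + Grace CPU
GPU_WATTS = 1_000           # B200 figure from the text; CPUs, switches etc. ignored

T_IN_C, T_OUT_C = 25.0, 45.0    # reported coolant temperatures
CP_J_PER_KG_K = 4186.0          # assumption: water-like coolant
DENSITY_KG_PER_L = 1.0          # assumption: water-like coolant


def coolant_flow_l_per_min(heat_watts: float) -> float:
    """Coolant flow needed to carry heat_watts away at the given temperature rise."""
    delta_t = T_OUT_C - T_IN_C
    mass_flow_kg_s = heat_watts / (CP_J_PER_KG_K * delta_t)
    return mass_flow_kg_s / DENSITY_KG_PER_L * 60


gpus = COMPUTE_NODES * SUPERCHIPS_PER_NODE * GPUS_PER_SUPERCHIP
gpu_heat_w = gpus * GPU_WATTS

print(f"GPUs across {COMPUTE_NODES} nodes: {gpus}")
print(f"GPU heat alone: {gpu_heat_w / 1_000:.0f} kW")
print(f"Coolant flow for the GPUs: {coolant_flow_l_per_min(gpu_heat_w):.0f} L/min")
```

Under these assumptions, every kilowatt of heat needs roughly 0.72 L/min of water, or about 52 L/min for the GPUs alone; real loads will be higher once CPUs, switches and losses are added.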

I'm really keen to dig into the technical and deployment docs for this.

🌊 Bring in the liquid cooling

Regardless of your opinion about the state of liquid cooling in data centres, there's no running away from reality: Liquid cooling is now mandatory for cutting-edge AI.

A few weeks back, I wrote about my private tour of SMC's incredible full-immersion AI data centre at STT GDC's STT Singapore 6 DC. https://lnkd.in/g9YiJ4ux

I imagine they could swap the new B100 GPUs (700W), or perhaps even the B200 GPUs (1,000W), into their servers without too much trouble.

What do you think? Will AI finally push us to deploy liquid cooling in data centres in earnest?

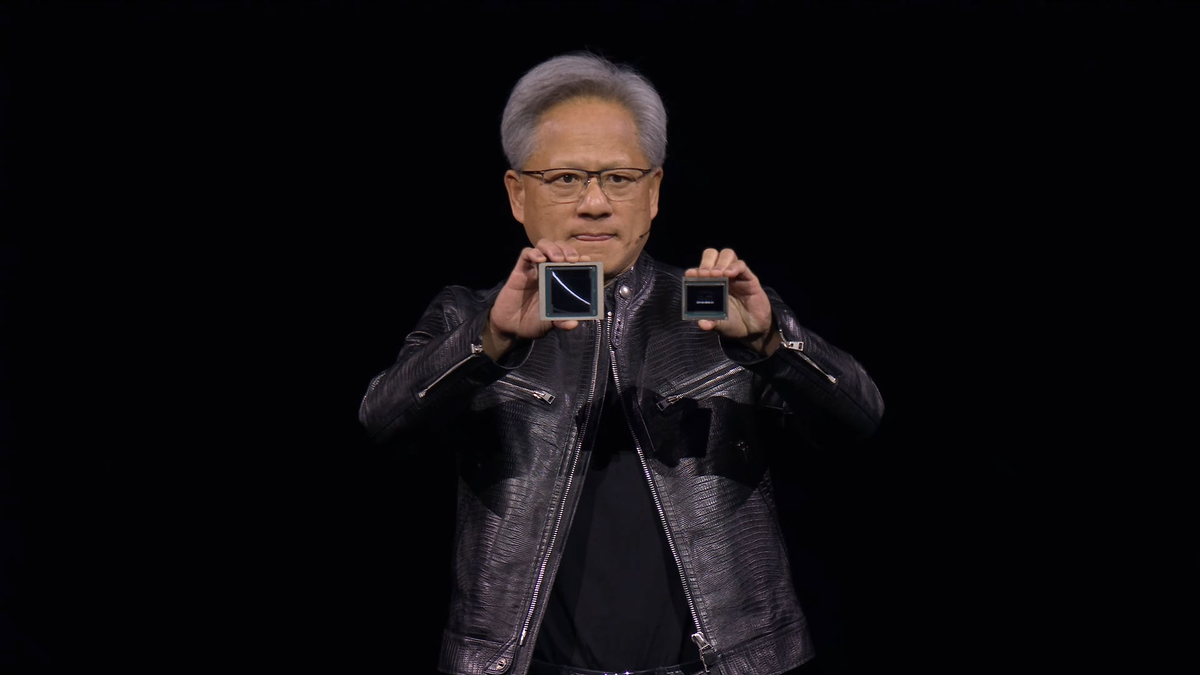

Photo Credit: Nvidia (B200 on the left; H100 on the right)