MIT Study: Using AI to write weakens writing skills

And writers remember less of what they submitted.

People who write using AI end up using it as a crutch and producing generic stuff, according to a new study. And using AI to write results in "cognitive debt".

In today's UnfilteredFriday, let's look at the impact of AI on our writing.

Cognitive debt

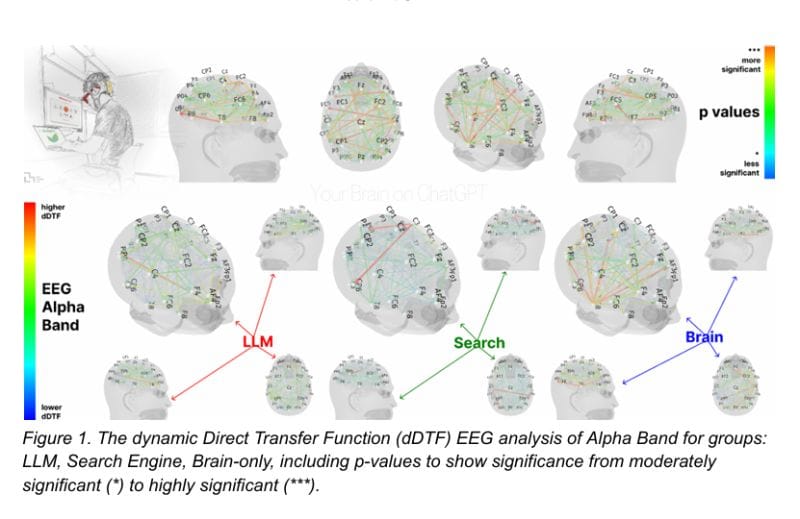

A new study looked at the impact of LLM use by dividing 54 participants into three groups: LLM group, Search Engine group, Brain-only group.

They were then asked to write an essay using their respective tools.

An EEG was used to record brain activity, NLP analysis was performed, and each participant was interviewed after each activity. Their work was also scored by human teachers and a specially built AI agent.

After four sessions, here's what the study concluded about the LLM group:

- Fell behind in their ability to quote from what they had written.

- Brain connectivity systematically scaled down.

- Performed worse at all levels: neural, linguistic, scoring.

When the essays were scored, those written with the help of LLMs were far more generic.

The problem with AI

Some of the problems with AI dependency are well known, and the study now puts evidence behind some of them. Two things jump out at me.

We anchor to the first idea

The fact that many LLM essays turn out generic isn't surprising. You see, our brain tends to anchor to the first idea we come across.

This means even rewritten AI content will invariably be "AI-tinged". The result?

- Generic AI ideas will influence what we write.

- Problems are not thought through as deeply.

- We stop developing our writing ability*.

*And as I always say, writing is thinking.

The time cost that made writing matter

Another issue is how time is often used as a signal for the things we write. The effort and time taken serve as an indication of the value of the written word. Think of:

- White papers.

- Recommendation letters.

- Thought leadership essays.

But today, it is possible to churn out dozens of pages of write-up at the push of a button. So just having copy that's free of grammatical errors no longer means anything.

Personally, this annoys me the most: reading through error-free yet poorly argued content, only to realise it's AI-written.

And now a study confirms that the author probably cannot remember half of it.

A matter of how we use AI

To be clear, I use AI every day - from ChatGPT, Claude, and Gemini to Perplexity. However, I do not use it wholesale for my LinkedIn posts - or anywhere else for that matter.

So, how one uses AI matters - a lot. The problem, of course, is we cannot control how others use it.

Maybe the real issue is this: when we no longer struggle with words, we also stop grappling with ideas.