Meta squeezes Nvidia's 120kW monster into air-cooled data centres

Meta's Catalina rack design makes a 120kW Blackwell GPU system work in air-cooled facilities built for 20kW racks.

Meta last week showed off a rack design that will let Nvidia's 120kW Blackwell GPU systems run in an air-cooled data centre.

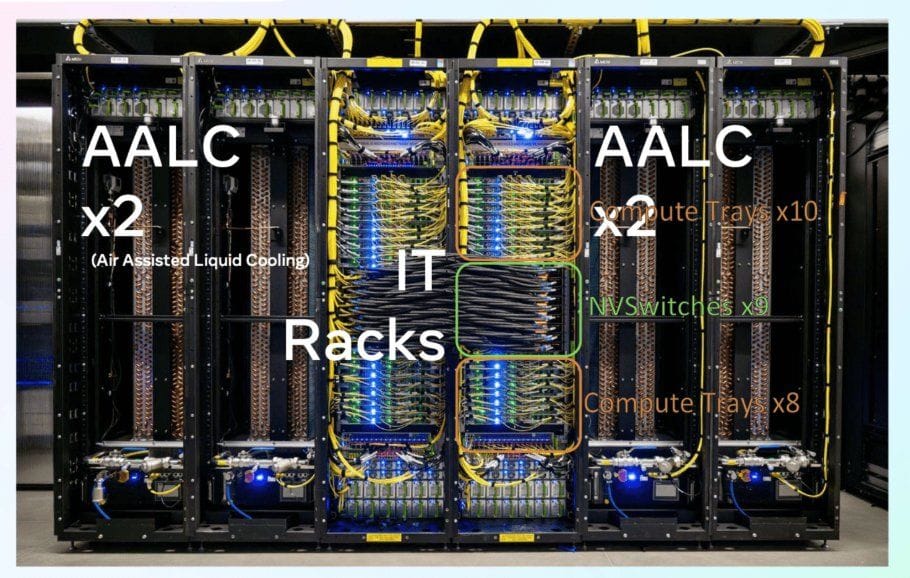

Meta's "Catalina" Open Compute rack debuted last year, and the latest adaptation for the Blackwell NVL36x2 was shown at the Hot Chips conference. The NVL36x2 system itself requires 120kW over two racks, but Meta says its design will work in air-cooled data centres that support only 20kW per rack.

Liquid-to-air CDUs

How did Meta pull this off? Short answer: by spacing things out across six racks and using liquid-to-air "side pods." I just call them liquid-to-air coolant distribution units (CDUs).

Here's how it looks: CDU | CDU | GPU | GPU | CDU | CDU

In Meta's case, the four CDU racks, two on each side, deliver 80kW (4x20kW) of cooling, while the two GPU racks shed the remaining 40kW through conventional air cooling.
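The arithmetic behind that split can be checked with a few lines of Python. This is only a sketch using the figures quoted above; the even split of the air-cooled load between the two GPU racks is my assumption, not something Meta has stated, and the function name is made up for illustration.

```python
# Figures quoted in the article.
SYSTEM_KW = 120      # NVL36x2 system power
CDU_RACKS = 4        # liquid-to-air side pods
CDU_KW_EACH = 20     # cooling delivered per CDU rack
GPU_RACKS = 2        # racks holding the GPU servers
RACK_LIMIT_KW = 20   # what the air-cooled facility supports per rack


def cooling_budget(system_kw, cdu_racks, cdu_kw_each, gpu_racks):
    """Split the system's heat load into liquid-cooled and air-cooled parts."""
    liquid_kw = cdu_racks * cdu_kw_each
    air_kw = system_kw - liquid_kw
    return {
        "liquid_kw": liquid_kw,
        "air_kw": air_kw,
        # Assumption: the air-cooled load is shared evenly by the GPU racks.
        "air_kw_per_gpu_rack": air_kw / gpu_racks,
        "avg_kw_per_rack": system_kw / (cdu_racks + gpu_racks),
    }


budget = cooling_budget(SYSTEM_KW, CDU_RACKS, CDU_KW_EACH, GPU_RACKS)
fits = (
    CDU_KW_EACH <= RACK_LIMIT_KW
    and budget["air_kw_per_gpu_rack"] <= RACK_LIMIT_KW
)
print(budget, "fits 20kW racks:", fits)
```

Under these assumptions every one of the six rack positions lands at the 20kW limit, which is why the design needs six racks rather than two.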

As I wrote previously, a liquid-to-air CDU takes the liquid from a direct-to-chip GPU server and cools it down using cold air from the data centre itself. These CDUs can be deployed within older, low-kW data centres, facilities that prohibit hot welding works, or when existing pipes don't offer adequate flow rates.

The photo below shows a MiTAC liquid-to-air CDU capable of 100kW of cooling. It's basically a cooling machine jam-packed with fans and heat exchangers.

How others are doing it

As I've previously mentioned, it's relatively easy to deploy high-powered GPUs within traditional data centres, as long as they're spaced out. In some deployments I've heard of in Singapore, an entire rack hosts just one or two H200 GPU servers, allowing even old data centres to support AI workloads.

Of course, this strategy goes out the window when high-density deployments with thousands of GPUs are involved. However, only intensive state-of-the-art AI training requires so many GPUs.
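To see why spacing out works for small deployments but not large ones, here is a rough sketch of the rack count it implies. The 10kW per-server figure is a placeholder I picked for illustration, not a measured number for any H200 server, and both function names are hypothetical.

```python
import math


def servers_per_rack(rack_limit_kw, server_kw):
    """How many servers fit within a rack's power and cooling limit."""
    return math.floor(rack_limit_kw / server_kw)


def racks_needed(num_servers, rack_limit_kw, server_kw):
    """Racks required when servers are spaced out to respect the limit."""
    per_rack = servers_per_rack(rack_limit_kw, server_kw)
    if per_rack == 0:
        raise ValueError("a single server exceeds the rack limit")
    return math.ceil(num_servers / per_rack)


ASSUMED_SERVER_KW = 10  # hypothetical per-server draw, for illustration only

small = racks_needed(8, rack_limit_kw=20, server_kw=ASSUMED_SERVER_KW)
large = racks_needed(500, rack_limit_kw=20, server_kw=ASSUMED_SERVER_KW)
print(small, large)
```

With these placeholder numbers, eight servers need four racks, which most facilities can spare, while 500 servers need 250 racks, which is where floor space becomes the limit.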

The real takeaway

Meta's approach is clever engineering that solves a real problem: most existing data centres can't handle the extreme density of modern AI hardware. By spreading the load across six racks instead of two, they're making Blackwell accessible to facilities that would otherwise be left behind.

This matters because it challenges the narrative that AI workloads require brand-new, purpose-built facilities. Personally, I think Meta probably designed this solution to fit AI processing into some of its older data centres, which have ample floor space but lack the power density.

As I wrote last week, enterprises and most organisations with limited AI workloads will probably find 40-50kW more than adequate for future-proofing. And as Meta's Catalina design proves, creative solutions can bridge the gap when you need more, without rebuilding your entire infrastructure.