Heavy AI users are the best at spotting AI use

Those who don't use AI at all have no chance.

Do you use AI? According to a recent study, people who use AI heavily can spot AI-written text with astounding accuracy. Those who don't use it at all do little better than a coin toss.

Writing with AI

Do you write with AI but don't want people to know? If so, you are probably aware that automatic AI detectors are unreliable.

They suffer from various problems:

- Low detection rates.

- High false positive rates.

- Poor robustness: even light paraphrasing can fool them.

Can humans fare better? It turns out they can, with a catch.

Can humans detect AI?

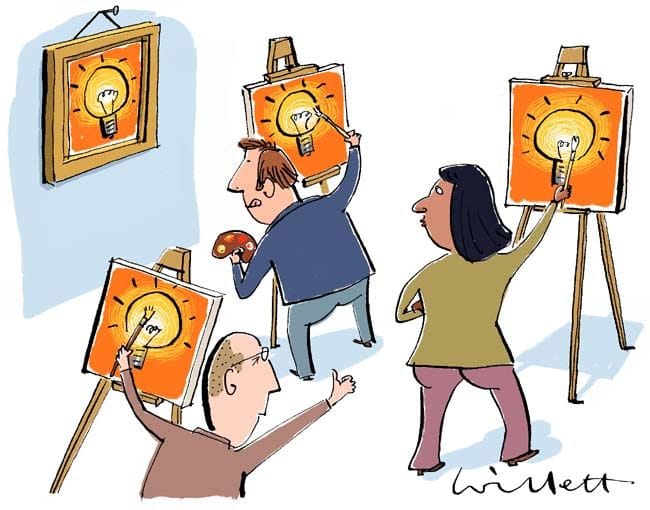

In a study published earlier this year, human annotators were asked to read 300 non-fiction English articles and classify each one as human-written or AI-written.

To raise the difficulty, the AI-written articles were generated with state-of-the-art LLMs such as GPT-4o, Claude 3.5 Sonnet, and o1-Pro, and some were further disguised with evasion techniques such as paraphrasing and humanisation.

The result?

A small group of five annotators who frequently use AI for writing tasks did exceptionally well. Taking a majority vote across the five, they misclassified just 1 of the 300 articles, outperforming the commercial and open-source AI detectors evaluated in the study.

On the other hand, annotators who said they don't use AI fared poorly, reaching an accuracy of 56.7%, close to the 50% you would expect from random guessing.

Common giveaways

What did the expert LLM users focus on that made them so good? Based on their annotations, common giveaways include:

- Overused AI vocabulary.

- Formulaic sentence structure.

- Document structure.

- Low originality.

- Factuality and tone.

Note: Neither paraphrasing nor humanising fully removes these signatures.

It turns out there's a science to why I've been able to "see" AI-generated content so clearly since I started using AI regularly.

Why we hate AI-written stuff

The study also supports why I've never used AI to write an entire piece, despite using it daily: fully masking an AI-written article takes so much effort that it's easier to write one from scratch.

Of course, the study noted that evasion tactics are still underexplored. It’s entirely possible that future advancements could outwit even expert users of LLMs.

But why do we dislike AI-written stuff so much? I've given this a lot of thought. In my view, the issue isn’t that it’s AI-generated.

It’s that AI makes writing effortless, but readers pay the price: they come for insight and often leave with something generic.

This gives AI-written content a bad reputation. So when we spot tell-tale signs of AI, we immediately dismiss the piece, even if it contains genuine insight.

What do you think?