Future proof with AI: Why humans still matter

Why humans remain irreplaceable in the AI era.

Will AI ever replace humans? What are some top prompt engineering tips? And how can AI search solve enterprise headaches?

This afternoon I attended "Future Proof with AI", a workshop hosted by AI Singapore and Elastic. The consensus from the experts was clear: AI won't replace us, but it's fundamentally changing how we work.

Here's what I learned.

AI in action

Remember PM Wong's National Day Rally speech about Q&M Dental Group using AI to analyse X-rays and help dentists diagnose problems? Well, it was part of the 100 Experiments (100E) programme initiated by AI Singapore, says Laurence Liew, director of AI Innovation.

The programme co-creates AI products and solutions for the industry, deploying one AI engineer and three AI apprentices per project. The solution deployed at Q&M Dental Group took seven months to develop.

In a panel discussion with Elastic's Sanjay Deshmukh, Laurence observed that AI projects before ChatGPT were all based on machine learning, focusing on areas such as computer vision, anomaly detection, and predictive modelling. In contrast, 80% of the projects at AI Singapore today revolve around generative AI and LLMs, underscoring the profound impact of generative AI.

Just a predictive machine

But if you're thinking that humankind will be left jobless and destitute, Laurence has this to tell you. An LLM works by looking at hundreds of tokens and predicting the next most likely token.

"Whatever the LLM is generating, it is not factual. It's based on probability. And the probability for a token could be 70-80%. Multiplied across the whole sentence..."

This means humans still need to evaluate the correctness of the output and apply their domain expertise. As Laurence puts it: "You must have very strong foundational knowledge when you work with LLMs."
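To get a feel for how a per-token probability compounds, here's a rough back-of-the-envelope sketch. It assumes, purely for illustration, that every token has the same probability and that tokens are chosen independently, which real models don't guarantee. The function name and the 20-token sentence length are my own assumptions, not figures from the talk.

```python
def sequence_probability(per_token_prob: float, num_tokens: int) -> float:
    """Probability of one exact token sequence, assuming every token is
    chosen independently with the same probability (a simplification)."""
    if not 0.0 <= per_token_prob <= 1.0:
        raise ValueError("per_token_prob must be between 0 and 1")
    if num_tokens < 0:
        raise ValueError("num_tokens must be non-negative")
    return per_token_prob ** num_tokens


if __name__ == "__main__":
    # 20 tokens is a hypothetical sentence length, not a figure from the talk.
    for p in (0.7, 0.8):
        print(f"p={p}: {sequence_probability(p, 20):.4f}")
```

Under these assumptions, even an 80% per-token probability leaves a 20-token sentence at roughly 1%. That number is the likelihood of one exact wording, not a measure of whether the content is true, but it shows why the output can't be treated as fact without a human check.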

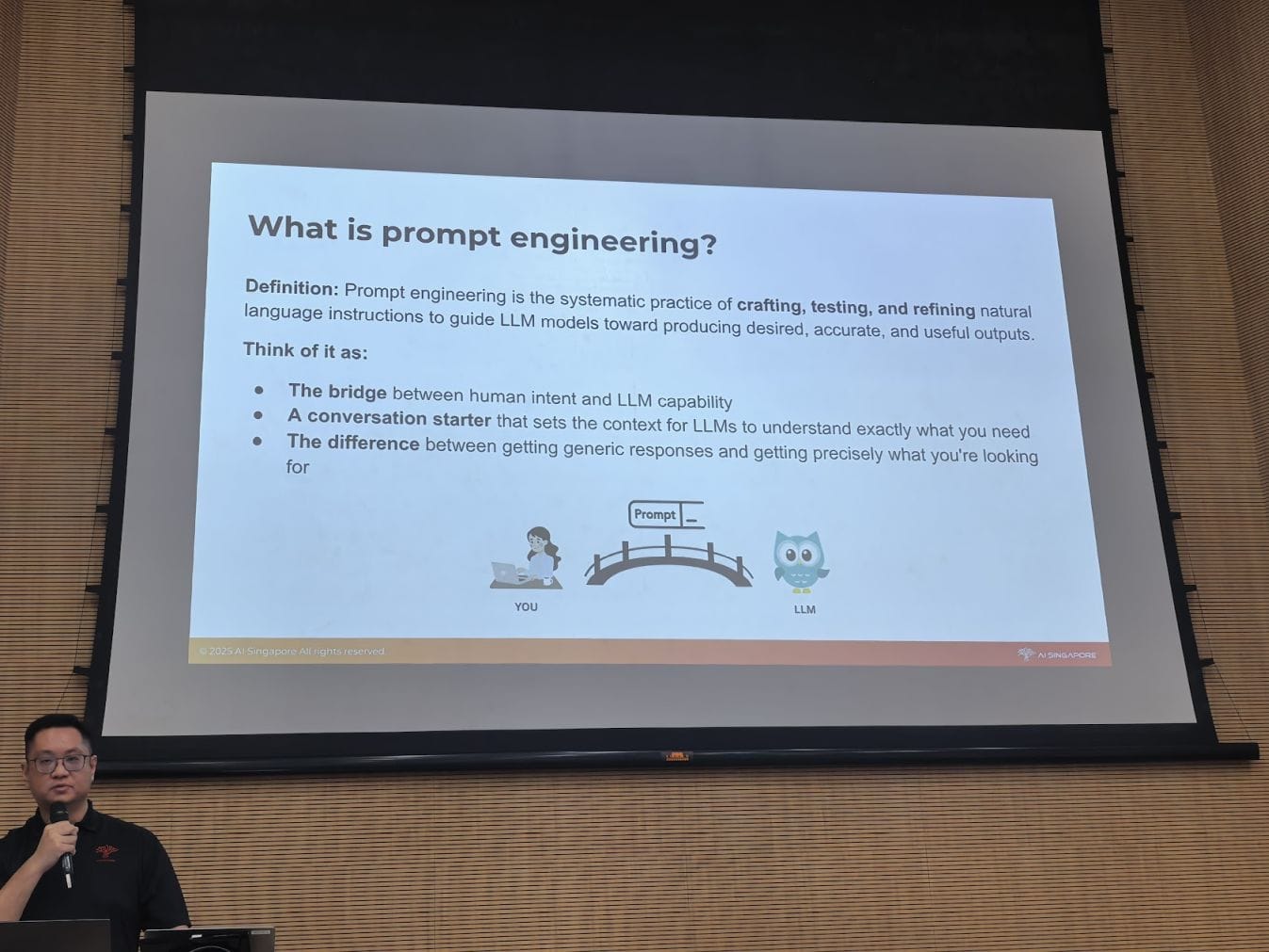

Prompting techniques

In his presentation, Meldrick Wee, lead AI engineer at AI Singapore, outlined a range of prompt engineering techniques and offered suggestions on crafting good prompts.

Some prompting techniques include Chain-of-Thought, Query Decomposition, Prompt Chaining, and Structured Outputs. I don't have space to outline everything, but two things stuck with me.

First, start simple and be specific. The more precise your prompts, the better your results. Second, mind your context window. Repeat vital instructions at the end to ensure they're not forgotten as the model processes your request.
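To make the second tip concrete, here's a minimal sketch of a prompt builder that states the key instructions up front and repeats them after a long context. The function name, the section labels, and the sample instructions are all hypothetical, made up for illustration. This isn't code from Meldrick's presentation, and it only builds a string without calling any model.

```python
def build_prompt(instructions: list[str], context: str, question: str) -> str:
    """Assemble a prompt that lists the key instructions first and
    repeats them after the (possibly long) context and question."""
    rules = "\n".join(f"- {rule}" for rule in instructions)
    return (
        f"Instructions:\n{rules}\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n\n"
        f"Reminder, follow these instructions:\n{rules}"
    )


if __name__ == "__main__":
    # Hypothetical, deliberately specific instructions.
    prompt = build_prompt(
        instructions=[
            "Answer in one sentence.",
            "Use only the context provided.",
        ],
        context="(a long document would go here)",
        question="What does the 100E programme do?",
    )
    print(prompt)
```

The resulting string can be passed to whichever model or API you use. The point is simply that the instructions appear both before and after the bulk of the context.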

Why we need AI-powered search

In the final segment, Han Xiang Choong, senior customer architect at Elastic, shared his generative AI journey and how the technology solved a particularly daunting problem at his previous organisation.

In a nutshell, his team was tasked with building a solution to address extreme customer service centre workloads. Traditional AI/ML approaches didn't work. Building an LLM from scratch resulted in too much hallucination.

So, what worked? In the end, the team built an AI-powered solution that substantially improved the capabilities of customer service agents. The result was much faster case resolution time and happier customers. And yes, humans are still very much needed.